In this article, we’ll be exploring MLOps. By way of definition, MLOps is all about managing and automating the entire machine learning development process. From data management and preprocessing to model deployment and maintenance, we’ll cover it all.

We’ll discuss the key components of MLOps, including data versioning, data validation, model training, and testing. We’ll also explore the skills required for MLOps and the future of this exciting field. Whether you’re an experienced data scientist or just starting out, this article is a great introduction to the world of MLOps.

What Is MLOps?

MLOps, or Machine Learning Operations, is a collection of practices and tools that help teams in a company collaborate to manage the entire process of creating and using machine learning models. This includes everything from preparing data to deploying and monitoring the model in production.

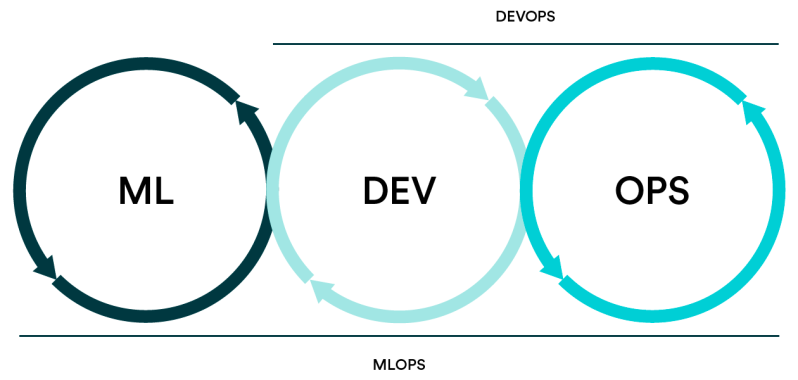

MLOps is designed to make the process of developing and using machine learning models more efficient and reliable. By using techniques from DevOps, like continuous integration and version control, MLOps helps teams to work together more effectively and automate many of the repetitive tasks involved in machine learning development.

So why should you adopt MLOps in your machine learning development process? The answer is that implementing MLOps practices can help companies to save time and resources, improve the accuracy and reliability of their models, and ensure that they meet legal and regulatory requirements.

MLOps vs. DevOps

MLOps builds on DevOps, which focuses on automating how software is built, tested, and released. Because of its focus on machine learning models, MLOps workflows may require specialized tools and techniques that are not typically used in traditional DevOps workflows. For example, MLOps may require data management tools that can handle large datasets, or specialized frameworks for model training and validation.

MLOps workflows are also more complex to manage. A model’s behavior depends on the data it was trained on as well as on its code, which makes models and data harder to test and debug than traditional software systems. This means that MLOps professionals need some expertise in machine learning algorithms, data management, and model validation and testing.

CI/CD - Continuous Integration/Continuous Deployment

CI/CD is a DevOps practice that helps ensure new code changes are integrated and deployed quickly and reliably. This is done by running automated tests and checks on each change before it is merged into the main codebase and deployed to production.
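To make the idea concrete, here is a minimal sketch of a CI/CD gate in Python, assuming each check is a plain function that returns `True` on success. The names `run_pipeline`, `checks`, and `deploy` are hypothetical; real pipelines are usually defined in a CI service’s own configuration rather than in application code.

```python
from typing import Callable, Dict


def run_pipeline(checks: Dict[str, Callable[[], bool]], deploy: Callable[[], None]) -> bool:
    """Run every automated check; deploy only if all of them pass."""
    failures = [name for name, check in checks.items() if not check()]
    if failures:
        print(f"Deployment blocked, failed checks: {failures}")
        return False
    deploy()
    return True


# Hypothetical checks standing in for a test suite and a linter.
released = []
ok = run_pipeline(
    {"unit_tests": lambda: True, "lint": lambda: True},
    deploy=lambda: released.append("v2"),
)
print(ok, released)  # True ['v2']
```

If any check returns `False`, `deploy` is never called, which is the property that keeps broken changes out of production.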

What’s Included in the MLOps Workflow?

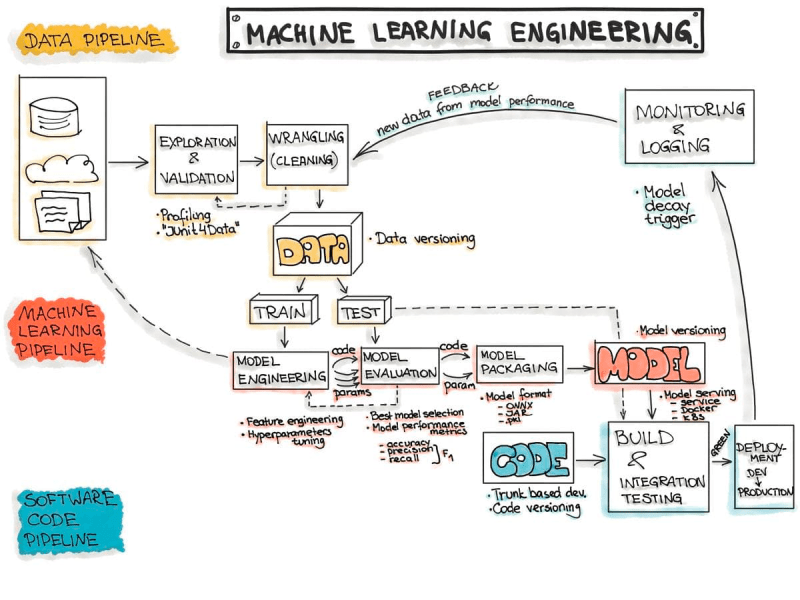

Data Management

1. Data Versioning

Data versioning means tracking changes to your datasets over time, so you always know exactly which version of the data was used to train a given model. This is particularly essential for machine learning, because a model is only as reliable and reproducible as the data it was trained on. To do data versioning well, use clear version names, keep detailed documentation of all changes made, and use a version control system that integrates with your MLOps workflow to keep everything organized and accessible.
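One common approach, the one DVC takes, for example, is to identify each dataset version by a hash of its contents, so any change to the data produces a new version. The sketch below illustrates that idea with SHA-256 and an in-memory dictionary; `register_version` and the registry layout are hypothetical, and in practice a dedicated tool would store the hashes and the data itself.

```python
import hashlib
from datetime import datetime, timezone


def register_version(registry: dict, name: str, data: bytes, note: str) -> str:
    """Record a new version of a dataset, identified by a SHA-256 hash of its bytes."""
    digest = hashlib.sha256(data).hexdigest()
    versions = registry.setdefault(name, [])
    if versions and versions[-1]["hash"] == digest:
        return versions[-1]["version"]  # contents unchanged since the latest version
    version = f"v{len(versions) + 1}"
    versions.append({
        "version": version,
        "hash": digest,
        "note": note,  # human-readable changelog entry
        "created": datetime.now(timezone.utc).isoformat(),
    })
    return version


registry = {}
v1 = register_version(registry, "customers", b"id,age\n1,34\n", "initial export")
v2 = register_version(registry, "customers", b"id,age\n1,34\n2,51\n", "added customer 2")
same = register_version(registry, "customers", b"id,age\n1,34\n2,51\n", "re-run, no changes")
print(v1, v2, same)  # v1 v2 v2
```

Storing a note with every version doubles as the change documentation recommended above.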

2. Data Validation

Data validation involves ensuring that your data is accurate, complete, and representative of the real-world phenomenon being modeled. Without proper validation, your models may produce unreliable or inaccurate results, which can be problematic in real-world applications. Typical checks include confirming that each field has the expected type, that values fall within plausible ranges, and that required fields are present. While data validation can be time-consuming and tedious, it’s necessary to ensure that your models are trained on reliable data. Best practices like comparing data to external benchmarks and using automated tools can help simplify the process.
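Automated validation can start as simply as checking every record against a schema of expected types and plausible ranges. This is a minimal sketch assuming records arrive as dictionaries; the field names and limits are made up for illustration, and libraries such as Great Expectations or pandera offer far richer checks.

```python
def validate_records(records, schema):
    """Check records against {field: (type, min, max)}; return a list of problems."""
    problems = []
    for i, row in enumerate(records):
        for field, (ftype, lo, hi) in schema.items():
            value = row.get(field)
            if value is None:
                problems.append(f"row {i}: missing '{field}'")
            elif not isinstance(value, ftype):
                problems.append(f"row {i}: '{field}' should be {ftype.__name__}")
            elif not lo <= value <= hi:
                problems.append(f"row {i}: '{field}'={value} outside [{lo}, {hi}]")
    return problems


# Hypothetical schema and data: an empty result means the batch passed.
schema = {"age": (int, 0, 120), "income": (float, 0.0, 1e7)}
records = [
    {"age": 34, "income": 52000.0},
    {"age": -3, "income": 41000.0},  # impossible age
    {"age": 29},                     # missing income
]
for problem in validate_records(records, schema):
    print(problem)
```

Here the check reports the negative age in row 1 and the missing income in row 2; a pipeline could refuse to train whenever the list is non-empty.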

3. Data Pre-Processing

Data pre-processing involves transforming raw data into a format that’s more suitable for use in machine learning models. Common techniques include data imputation (filling in missing values), scaling and normalization (putting features on comparable ranges), and feature extraction (deriving more informative inputs from raw fields). Together, they help make the data complete and consistent. Despite being time-consuming, data pre-processing is essential for building accurate and reliable machine learning models.
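Here is a minimal sketch of two of these techniques, mean imputation followed by standardization (a common form of scaling), in plain Python. The function names are hypothetical. Note the design choice: the mean and standard deviation are learned from training data only and then reused on new data, so training and serving apply exactly the same transformation.

```python
from statistics import mean, pstdev


def fit_preprocessor(column):
    """Learn imputation and scaling parameters from the training column only."""
    present = [x for x in column if x is not None]
    mu = mean(present)
    sigma = pstdev(present) or 1.0  # guard against constant columns (std of 0)
    return mu, sigma


def transform(column, params):
    """Fill missing values with the training mean, then standardize with the training stats."""
    mu, sigma = params
    return [((mu if x is None else x) - mu) / sigma for x in column]


train = [10.0, None, 30.0, 20.0]
params = fit_preprocessor(train)
print(transform(train, params))
# Later, the same params are reused on unseen data at serving time:
print(transform([None, 25.0], params))
```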

Model Development

1. Model Versioning

Model versioning is all about saving and keeping track of every version of your model, including working and non-working ones. Much like data versioning, a versioning system helps ensure the reproducibility and reliability of your model. You can easily revert to a previous version of the model and compare the performance of different versions, which helps you identify and fix errors and keep your model producing accurate, reliable results over time. It is also useful because data scientists typically run many experiments and need to be able to tell them apart.
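The sketch below shows the bookkeeping a model versioning system does, using a hypothetical in-memory `ModelRegistry` class: each version stores its hyperparameters, evaluation metrics, and a note, so you can compare versions and roll back. Tools such as the MLflow Model Registry provide this, with persistent storage, in real projects. The metric values in the example are made up.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelVersion:
    version: int
    params: dict   # hyperparameters used for this run
    metrics: dict  # evaluation results, e.g. {"accuracy": 0.86}
    notes: str = ""


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; a real one would persist to storage."""
    versions: List[ModelVersion] = field(default_factory=list)
    current: Optional[int] = None  # version currently in use

    def register(self, params: dict, metrics: dict, notes: str = "") -> int:
        mv = ModelVersion(len(self.versions) + 1, params, metrics, notes)
        self.versions.append(mv)
        self.current = mv.version
        return mv.version

    def best(self, metric: str) -> int:
        """Return the version number with the highest value of `metric`."""
        return max(self.versions, key=lambda mv: mv.metrics[metric]).version

    def rollback(self, version: int) -> None:
        """Point `current` back at an earlier version."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"unknown version {version}")
        self.current = version


# Made-up metrics for three experiments.
registry = ModelRegistry()
registry.register({"max_depth": 3}, {"accuracy": 0.81}, "baseline")
registry.register({"max_depth": 8}, {"accuracy": 0.86}, "deeper trees")
registry.register({"max_depth": 20}, {"accuracy": 0.79}, "overfitting experiment")
registry.rollback(registry.best("accuracy"))
print(registry.current)  # 2
```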

2. Model Training

Proper model training can make all the difference in the accuracy and reliability of your machine-learning models. By using a high-quality dataset and choosing the right algorithm and hyperparameters, you can help ensure that your model is well suited to the task at hand.
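As a minimal illustration of what a training step does, the sketch below fits a one-feature linear model with batch gradient descent on mean squared error. It is a stand-in chosen for brevity, not a recommendation; the learning rate, epoch count, and toy data are assumptions. Fixing the random seed makes the run reproducible, which matters for the versioning practices described above.

```python
import random


def train_linear(xs, ys, lr=0.05, epochs=500, seed=0):
    """Fit y ≈ w*x + b by batch gradient descent on mean squared error.

    A fixed seed makes the random initialization, and therefore the whole run, reproducible.
    """
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data generated from y = 2x + 1
w, b = train_linear(xs, ys)
print(round(w, 2), round(b, 2))  # 2.0 1.0
```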

3. Hyperparameter Optimization

Hyperparameters are settings you choose before training rather than values the model learns, such as the learning rate or the maximum depth of a decision tree. Getting them right can make a big difference in the accuracy and reliability of your models: poorly chosen hyperparameters can cause the model to overfit or underfit, which leads to incorrect results. By taking the time to fine-tune them, you can help your model perform as accurately and reliably as possible.

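There are several techniques you can use to optimize hyperparameters. Grid search tries every combination from a predefined set of values, random search samples combinations at random, and Bayesian optimization uses the results of earlier trials to choose promising values to try next. Each helps you explore the options and identify the best combination for your specific problem.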
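Grid search and random search are simple enough to sketch directly. In this hypothetical example, `toy_score` stands in for training a model with the given hyperparameters and returning its validation score; in practice that call is the expensive part, which is why random search’s fixed trial budget is attractive. Bayesian optimization is usually done with a dedicated library such as Optuna rather than by hand.

```python
import itertools
import random


def grid_search(score, grid):
    """Evaluate every combination in `grid`; return (best_params, best_score)."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[n] for n in names)):
        params = dict(zip(names, values))
        s = score(params)
        if best is None or s > best[1]:
            best = (params, s)
    return best


def random_search(score, grid, n_trials, seed=0):
    """Evaluate `n_trials` randomly sampled combinations instead of all of them."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {n: rng.choice(values) for n, values in grid.items()}
        s = score(params)
        if best is None or s > best[1]:
            best = (params, s)
    return best


def toy_score(p):
    """Hypothetical validation score that peaks at depth=6, lr=0.1."""
    return -(p["depth"] - 6) ** 2 - 100 * (p["lr"] - 0.1) ** 2


grid = {"depth": [2, 4, 6, 8], "lr": [0.01, 0.1, 0.3]}
print(grid_search(toy_score, grid))  # ({'depth': 6, 'lr': 0.1}, 0.0)
print(random_search(toy_score, grid, n_trials=5))
```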

Model Testing

1. Continuous Integration

Continuous integration means merging code changes into a shared repository frequently, with automated tests running on every change. By integrating on a regular basis, you can catch issues or errors early in the development process, which can save a lot of time and effort down the line. Automated testing frameworks and version control systems make the process much easier and more efficient.
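In Python projects, the tests a CI server runs on each change are often ordinary `unittest` or pytest tests. The sketch below assumes a hypothetical `predict` function standing in for a trained model; saved as, say, `test_model.py`, a CI job could run it with `python -m unittest`. It combines a regression test on fixed inputs with a simple accuracy threshold on a small held-out set.

```python
import unittest


def predict(features):
    """Hypothetical stand-in for a trained model: flags transactions above a limit."""
    return 1 if features["amount"] > 1000 else 0


class TestModel(unittest.TestCase):
    def test_known_cases(self):
        # Regression test: fixed inputs whose expected outputs must not change.
        self.assertEqual(predict({"amount": 5000}), 1)
        self.assertEqual(predict({"amount": 20}), 0)

    def test_minimum_accuracy(self):
        # Quality gate: fail the build if accuracy on a small held-out set drops below 90%.
        holdout = [({"amount": 1500}, 1), ({"amount": 300}, 0), ({"amount": 999}, 0)]
        correct = sum(predict(x) == y for x, y in holdout)
        self.assertGreaterEqual(correct / len(holdout), 0.9)
```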

2. Model Evaluation Metrics

By evaluating your model properly, you can make sure that it’s actually good at the task you want it to perform. Use metrics that fit the problem (for a classifier, for example, accuracy, precision, recall, and F1 score) and compute them on diverse sets of data to see how well the model performs in different scenarios.

It’s also important to keep evaluating your model over time. This lets you catch any issues or errors that might pop up and fine-tune the model for even better performance.
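For a binary classifier, the standard metrics can be computed directly from counts of true positives, false positives, and false negatives. This plain-Python sketch (scikit-learn provides equivalents in `sklearn.metrics`) also shows why a single metric is rarely enough: on imbalanced data, a model that never predicts the positive class can still score high accuracy.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels, where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Illustrative data: 8 negatives and 2 positives; the model predicts 0 for everything.
y_true = [0] * 8 + [1] * 2
y_pred = [0] * 10
print(classification_metrics(y_true, y_pred))
# {'accuracy': 0.8, 'precision': 0.0, 'recall': 0.0, 'f1': 0.0}
```

An accuracy of 80% looks respectable, but a recall of 0 reveals that the model misses every positive case.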

3. Model Validation

Overfitting is a common problem in machine learning where the model fits its training data too closely, including its noise, and doesn’t perform well on new data. Model validation helps detect this by testing the model on data it hasn’t seen during training.

Getting model validation right is super important. By using the right validation techniques, such as a held-out validation set or k-fold cross-validation, and testing on diverse datasets, you can make sure your model generalizes to new data rather than just memorizing the training set.
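k-fold cross-validation splits the data into k folds, trains on k−1 of them, scores on the remaining one, and averages the scores so every example is held out exactly once. The sketch below is a minimal plain-Python version; `fit_mean` and `mse` are hypothetical placeholders for a real model and metric. In practice you would shuffle the data first (contiguous folds can be biased if the data is sorted), and scikit-learn’s `KFold` and `cross_val_score` provide tested implementations.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) pairs; each of the k contiguous folds is held out once."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n))
        yield train_idx, val_idx
        start += size


def cross_validate(fit, score, xs, ys, k=5):
    """Train on k-1 folds, score on the held-out fold, and average over all k folds."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in val_idx], [ys[i] for i in val_idx]))
    return sum(scores) / len(scores)


# Hypothetical baseline "model": always predict the mean of the training targets.
def fit_mean(xs, ys):
    return sum(ys) / len(ys)


def mse(model, xs, ys):
    return sum((model - y) ** 2 for y in ys) / len(ys)


xs = list(range(10))
ys = [2.0 * x for x in xs]
print(cross_validate(fit_mean, mse, xs, ys, k=5))
```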

Model Deployment

1. Deployment Strategies

When it comes to deploying machine learning models, there are several strategies available. You can deploy models on your own hardware (on-premises), on cloud-based platforms like AWS or Google Cloud, or as a web service accessed through an API. Each option involves trade-offs: for example, on-premises deployment gives you more control but requires you to provide and maintain the hardware yourself, while other options can be more cost-effective but may require additional development work. Consider your specific needs and resources when deciding on the best deployment strategy for your machine learning model.
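To illustrate the web-service option, here is a minimal sketch of a JSON prediction endpoint built only on Python’s standard library `http.server`. The `predict` function, its weights, and the `/predict` route are hypothetical placeholders for a real model. `http.server` is not recommended for production use, so a real deployment would typically use a proper web framework or a managed serving platform.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Hypothetical model: a fixed linear scorer standing in for a trained model."""
    weights = {"age": 0.02, "income": 0.00001}
    return sum(weights[name] * features.get(name, 0.0) for name in weights)


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            features = json.loads(self.rfile.read(length))
            body = json.dumps({"prediction": predict(features)}).encode()
        except (ValueError, AttributeError, TypeError):
            # Malformed JSON, a non-object payload, or non-numeric feature values.
            self.send_error(400, "expected a JSON object of numeric features")
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Block forever, serving predictions on localhost (development use only)."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

After calling `serve()`, a client could send `curl -X POST http://127.0.0.1:8000/predict -d '{"age": 40, "income": 50000}'` and receive a JSON object with a `prediction` field.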

2. Model Monitoring

3. Model Scalability

Model scalability refers to the ability of a machine learning model to handle increasing amounts of data and requests without sacrificing performance. As the amount of data and requests increases, the model should be able to handle them without slowing down or crashing.
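Scalability is usually addressed at several levels, such as running more replicas behind a load balancer or caching frequent requests. One technique that is easy to show in code is batching: scoring inputs in fixed-size groups keeps memory use bounded for large datasets and lets vectorized model code process many inputs per call. In this sketch, `predict_batch` is a hypothetical stand-in for a real model.

```python
from itertools import islice


def batched(iterable, size):
    """Yield lists of up to `size` items (Python 3.12+ has itertools.batched, which yields tuples)."""
    it = iter(iterable)
    while batch := list(islice(it, size)):
        yield batch


def predict_batch(batch):
    """Hypothetical model call that scores a whole batch at once."""
    return [2 * x + 1 for x in batch]


def predict_stream(inputs, batch_size=64):
    """Score an arbitrarily large stream of inputs, one bounded batch at a time."""
    for batch in batched(inputs, batch_size):
        yield from predict_batch(batch)


# The input stream is consumed lazily, one batch of `batch_size` items at a time.
print(list(predict_stream(range(10), batch_size=4)))
# [1, 3, 5, 7, 9, 11, 13, 15, 17, 19]
```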

Model Maintenance

1. Continuous Delivery

Continuous delivery ensures the ongoing maintenance and optimization of your machine-learning models. It involves automating the deployment of updates and changes to the model, allowing for quick and efficient maintenance.
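A common ingredient of continuous delivery for models is an automated promotion rule: a newly retrained candidate replaces the production model only if it performs better on the same evaluation data. The sketch below assumes models are described by hypothetical dictionaries with a `metrics` entry; the metric name, the `min_gain` margin, and the numbers in the example are illustrative choices, not fixed conventions.

```python
def promote_if_better(candidate, production, metric="accuracy", min_gain=0.0):
    """Return whichever model should serve traffic after an automated update.

    The candidate replaces production only if it beats it by more than `min_gain`
    on `metric`; otherwise production stays in place.
    """
    if production is None:  # first deployment: nothing to compare against
        return candidate
    gain = candidate["metrics"][metric] - production["metrics"][metric]
    return candidate if gain > min_gain else production


production = {"name": "model-v3", "metrics": {"accuracy": 0.85}}
retrained = {"name": "model-v4", "metrics": {"accuracy": 0.87}}
print(promote_if_better(retrained, production, min_gain=0.01)["name"])  # model-v4
```

Requiring a small margin such as `min_gain` avoids swapping models over differences that are within evaluation noise.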