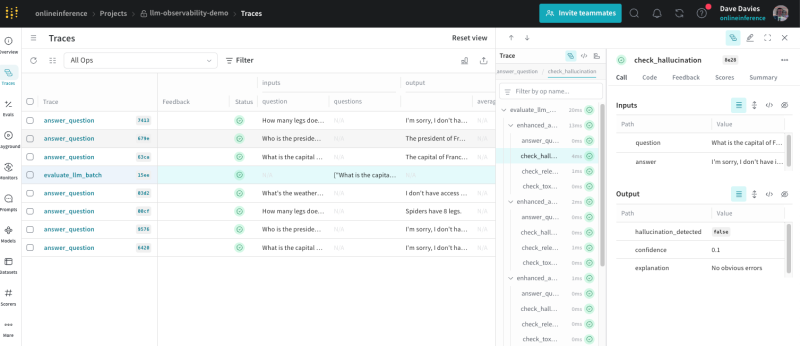

LLM observability: Your guide to monitoring AI in production

Large language models like GPT-4o and LLaMA are powering a new wave of AI applications, from chatbots and coding assistants to research tools. However, deploying these LLM-powered applications in production is far more challenging than deploying traditional software or even typical machine learning systems. LLMs are massive and non-deterministic, often behaving as black boxes with unpredictable outputs. […]

Reinforcement learning: A guide to AI’s interactive learning paradigm

On this page: What is reinforcement learning? · The goal · Online vs offline reinforcement learning · Taxonomy of reinforcement learning algorithms · Core reinforcement learning methods: Dynamic programming, Monte Carlo, and temporal difference · From classical reinforcement learning to deep function approximation · Benchmarks, evaluation metrics, and frameworks · Recent advances and trends in reinforcement learning for language models · Successful applications […]

What is LLMOps and how does it work?

The rise of large language models (LLMs) has revolutionized natural language processing, opening the door to powerful applications across industries—from conversational agents and code generation to enterprise search and document summarization. But building, deploying, and maintaining LLM-powered systems at scale isn’t straightforward. That’s where LLMOps comes in. LLMOps—short for large language model operations—encompasses the practices, […]

What are AI agents? Key concepts, benefits, and risks

AI agents are reshaping how humans solve complex problems, enabling intelligent decision-making and dynamic task execution beyond traditional AI systems like chatbots. Unlike chatbots, which follow scripted workflows, AI agents operate autonomously, learning […]

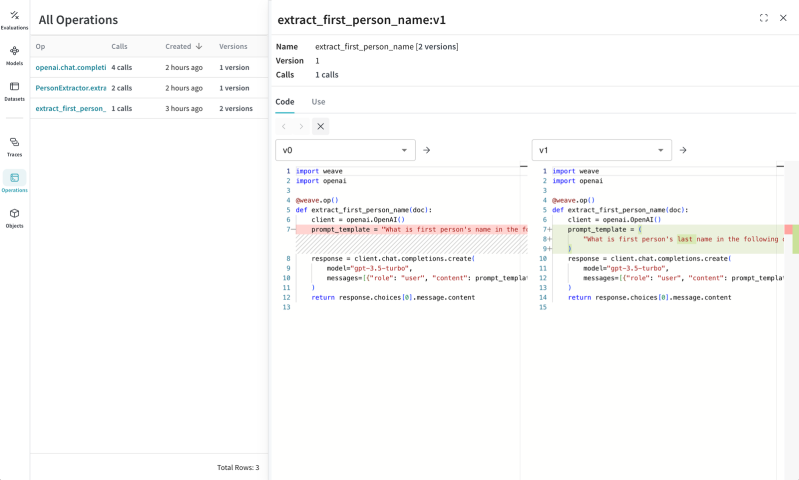

Weave: Simple tools for applying Generative AI

Six years ago, the tools needed to realize the potential of deep learning didn’t exist. We started Weights & Biases to build them. Our tools have made it possible to track and collaborate on the colossal amount of experimental data needed to develop GPT-4 and other groundbreaking models. Today, GPT-4 has incredible potential in applications […]

Responsible AI: A guide to guardrails and scorers

The rapid adoption of generative AI and large language models has transformed industries, enabling powerful applications in domains like customer service, content creation, and research. However, this innovation introduces risks related to misinformation, bias, and privacy breaches. To ensure AI operates within ethical and functional boundaries, organizations must implement AI guardrails – structured safeguards that […]

Appendix

Large pre-trained transformer language models, or simply large language models (LLMs), are a recent breakthrough in machine learning that has vastly extended our capabilities in natural language processing (NLP). Based on transformer architectures, with as many as hundreds of billions of parameters, and trained on hundreds of terabytes of textual data, recent LLMs such […]

Reference

What Language Model Architecture and Pre-training Objective Work Best for Zero-Shot Generalization?; GPT-3 Paper – Language Models are Few-Shot Learners; GPT-NeoX-20B: An Open-Source Autoregressive Language Model; OPT: Open Pre-trained Transformer Language Models; PaLM: Scaling Language Modeling with Pathways; Efficient Large-Scale Language Model Training on GPU Clusters Using Megatron-LM; How To Build an Efficient NLP […]

Conclusion

Whether it’s OpenAI, Cohere, or open-source projects like EleutherAI, cutting-edge large language models are built on Weights & Biases. Our platform enables collaboration across teams performing the complex, expensive work required to train and push these models to […]

RLHF

RLHF (Reinforcement Learning from Human Feedback) extends instruction tuning by incorporating human feedback after the instruction tuning step to improve model alignment with user expectations. Pre-trained LLMs often exhibit unintended behaviors, such as fabricating facts, generating biased or toxic responses, or failing to follow instructions due to the misalignment […]